Haptic Sound Machine

tl;dr

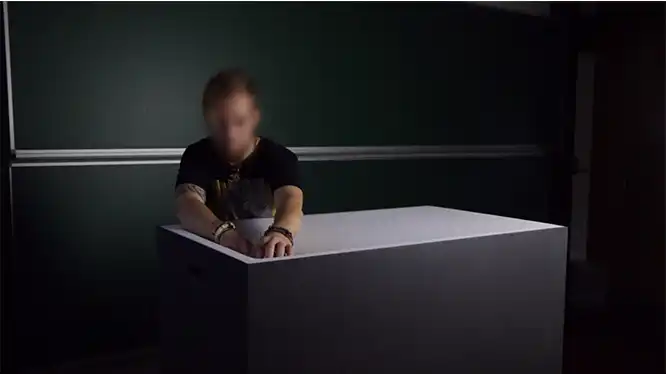

With the haptic sound machine, we wanted people to enjoy interacting with sound through gestures and haptics in a playful, easy way, without needing any background knowledge. There is no right or wrong, only immediate, synchronous feedback. We focus on the experience itself rather than on creating harmonious sounds. Using fabric in this unusual context creates a close relationship between movement, sound and the sense of touch, so that only a minimal haptic vocabulary is needed to play the instrument.

Prototyping

About

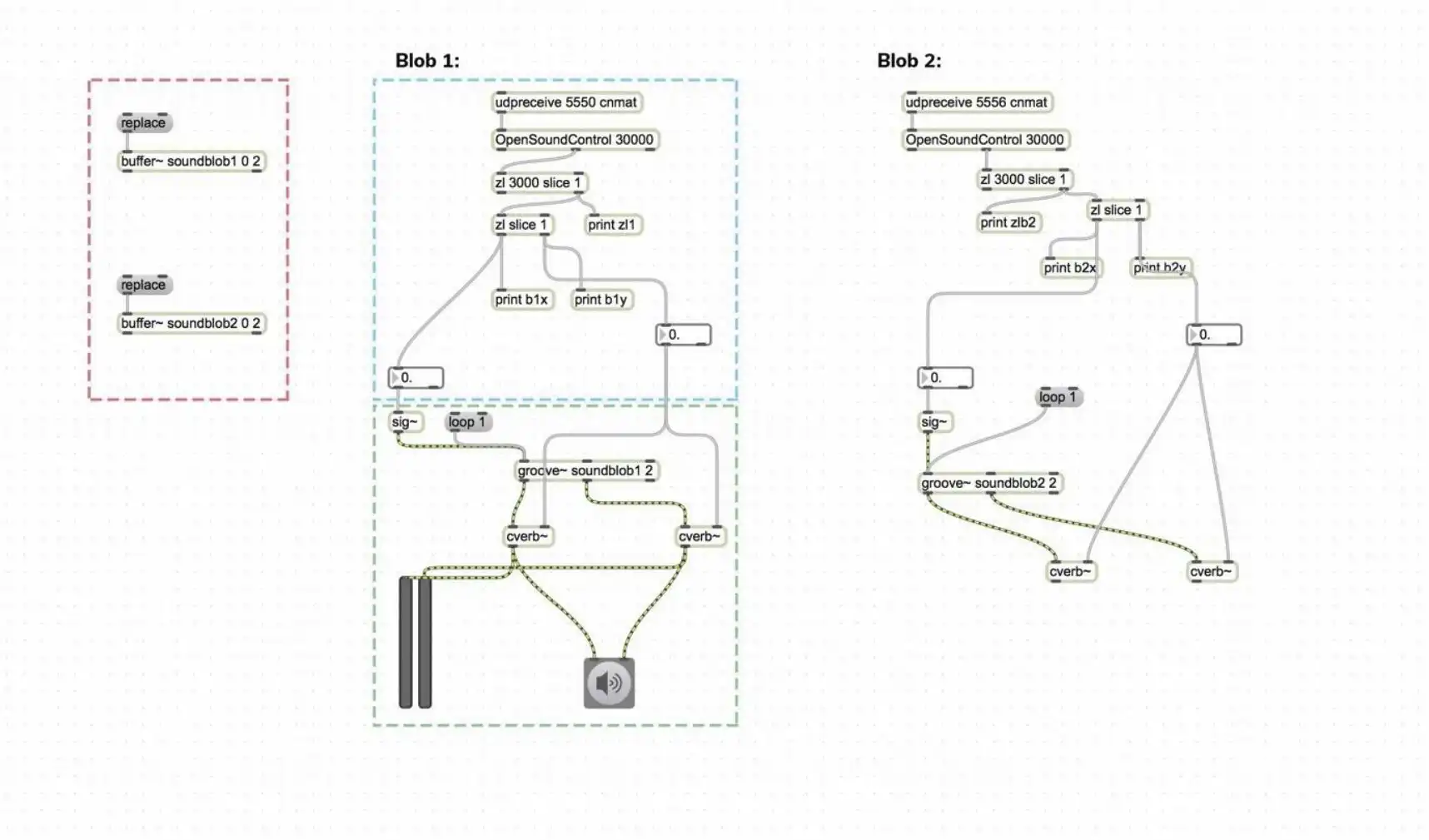

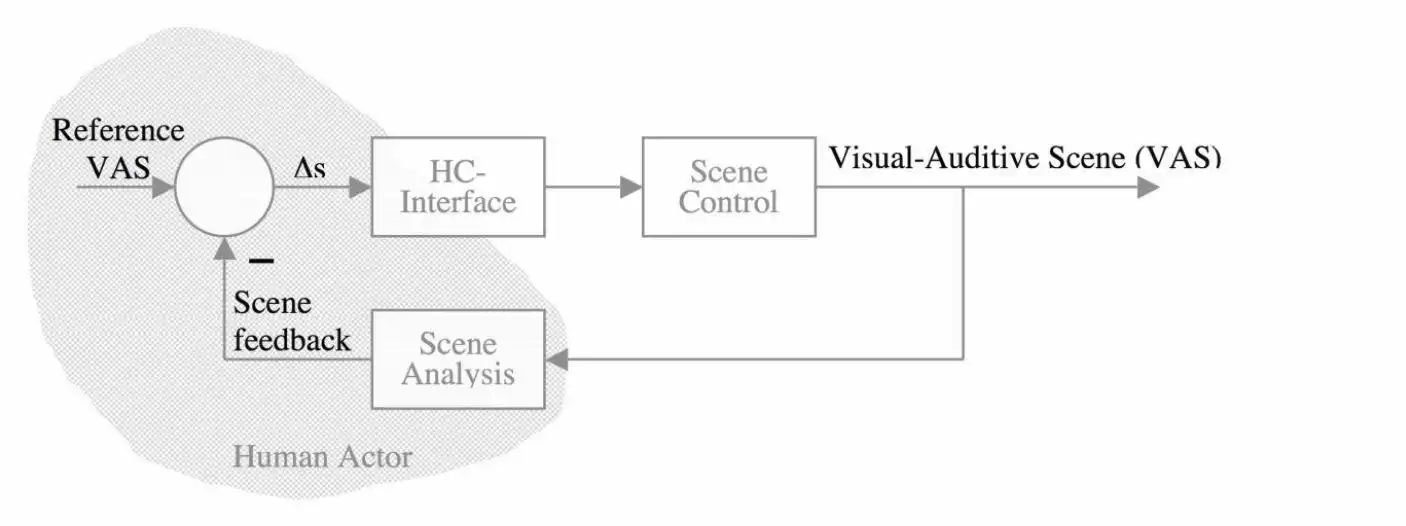

The haptic sound machine (HSM) is a wooden box with fabric stretched over a detachable top layer. The heart of the machine, mounted inside the box, is a depth camera (in our case a Microsoft Kinect) connected to a MacBook Pro. We used Processing and Max/MSP to process the signals coming from the depth camera and to modulate the sound. The graphic shown below (Fetzner, Friedmann 2007) visualizes the interaction between the user and the sound machine.

reference visual auditive scene (reference vas) as seen from the user’s perspective

the actual situation

hc interface (human-computer interface)

the fabric in connection with the depth camera

scene control

the code in the Processing IDE and the communication between Processing and the Max/MSP patches, combined with the data from the Kinect

scene analysis

The user analyzes the result (feedback) of their interaction with the interface and the resulting scene. They derive Δs from the difference between their reference vas and the actually resulting vas.

Δs

In our installation, Δs reflects the experience gained from using the machine iteratively: the more experience the user gains, the smaller Δs becomes. Because the HSM responds immediately, users gain this experience quickly, and the declining Δs leads to an increase in immersion.
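One way to make Δs concrete is to index the user's iterations with n and assume, which the original model does not state, that the reference scene and the resulting scene can be compared with some distance measure d:

$$
\Delta s_n = d\left(\mathrm{vas}_{\mathrm{ref}},\ \mathrm{vas}_{\mathrm{res},n}\right), \qquad \Delta s_{n+1} \le \Delta s_n
$$

Here d and n are introduced for illustration only. The second relation restates that Δs declines with use; because the HSM's feedback is immediate, each iteration is short, so many iterations (and thus a shrinking Δs) fit into a brief session.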

Technical Overview

As described above, the HSM was realized using a depth camera, Processing and Max/MSP.

Processing

Processing was responsible for retrieving the depth data from the Kinect (v1), grouping it into blobs and evaluating them to resolve the x and y position of each blob. It then sent this data to Max via Open Sound Control (OSC). We used oscP5 for the UDP/OSC data transmission, SimpleOpenNI to receive the Kinect data, and BlobDetection to detect the blobs.
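The Processing stage can be sketched in plain Java to show the data flow. This is a minimal illustration under stated assumptions, not the project's code, and it does not use the SimpleOpenNI, BlobDetection or oscP5 APIs. It takes a depth frame as an int array of millimetre values, finds blobs with a simple breadth-first flood fill (standing in for BlobDetection), normalizes each blob's centroid to 0..1, and encodes it by hand as an OSC 1.0 message sent over UDP (the job oscP5 did). The "pressed = closer than a threshold" rule, the `/blob` address, port 7400 and all depth values are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class BlobToOsc {
    /** Blob centroid normalized to 0..1 on both axes, plus its size in pixels. */
    public record Blob(float x, float y, int size) {}

    private static final int[] DX = {-1, 1, 0, 0};
    private static final int[] DY = {0, 0, -1, 1};

    /** Assumption: pressing the fabric pushes it toward the camera, so "pressed" = closer than the threshold. */
    static boolean isPressed(int depthMm, int thresholdMm) {
        return depthMm > 0 && depthMm < thresholdMm; // 0 means "no depth reading"
    }

    /**
     * Groups pressed pixels into 4-connected blobs with a breadth-first flood fill (a stand-in
     * for the BlobDetection library). depth is row-major, in mm; width and height must be > 1.
     */
    public static List<Blob> detectBlobs(int[] depth, int width, int height, int thresholdMm, int minSize) {
        boolean[] visited = new boolean[depth.length];
        List<Blob> blobs = new ArrayList<>();
        ArrayDeque<Integer> queue = new ArrayDeque<>();
        for (int start = 0; start < depth.length; start++) {
            if (visited[start] || !isPressed(depth[start], thresholdMm)) continue;
            long sumX = 0, sumY = 0;
            int count = 0;
            visited[start] = true;
            queue.add(start);
            while (!queue.isEmpty()) {
                int i = queue.poll();
                int x = i % width, y = i / width;
                sumX += x; sumY += y; count++;
                for (int k = 0; k < 4; k++) {
                    int nx = x + DX[k], ny = y + DY[k];
                    if (nx < 0 || nx >= width || ny < 0 || ny >= height) continue;
                    int j = ny * width + nx;
                    if (!visited[j] && isPressed(depth[j], thresholdMm)) {
                        visited[j] = true;
                        queue.add(j);
                    }
                }
            }
            if (count >= minSize) { // drop tiny regions, e.g. sensor noise
                blobs.add(new Blob((float) sumX / count / (width - 1), (float) sumY / count / (height - 1), count));
            }
        }
        return blobs;
    }

    /** Encodes an OSC 1.0 message whose arguments are all float32 (type tag 'f'). */
    public static byte[] encodeOsc(String address, float... args) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeOscString(out, address);
        writeOscString(out, "," + "f".repeat(args.length));
        for (float f : args) {
            out.writeBytes(ByteBuffer.allocate(4).putFloat(f).array()); // ByteBuffer is big-endian by default
        }
        return out.toByteArray();
    }

    /** OSC strings are ASCII, null-terminated and null-padded to a multiple of 4 bytes. */
    private static void writeOscString(ByteArrayOutputStream out, String s) {
        byte[] bytes = s.getBytes(StandardCharsets.US_ASCII);
        out.writeBytes(bytes);
        int pad = 4 - bytes.length % 4; // 1..4 null bytes, so a terminator is always present
        for (int k = 0; k < pad; k++) out.write(0);
    }

    public static void main(String[] args) throws IOException {
        int w = 8, h = 6;
        int[] depth = new int[w * h];
        Arrays.fill(depth, 1200); // synthetic frame: resting fabric
        for (int y = 2; y <= 3; y++) for (int x = 4; x <= 5; x++) depth[y * w + x] = 1000; // simulated press
        try (DatagramSocket socket = new DatagramSocket()) {
            InetAddress target = InetAddress.getLoopbackAddress();
            for (Blob b : detectBlobs(depth, w, h, 1100, 2)) {
                System.out.println(b);
                byte[] msg = encodeOsc("/blob", b.x(), b.y());
                socket.send(new DatagramPacket(msg, msg.length, target, 7400)); // hypothetical Max port
            }
        }
    }
}
```

Normalizing the centroid keeps the Max patch independent of the camera resolution. On the Max side, a `udpreceive` object listening on the same port should output each message as `/blob x y`, ready to be routed to sound parameters.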