Haptocube

tl;dr

Virtual realities are closer to real life than ever before. Immersive environments enable unique experiences, from phobia therapies to new ways of communicating, and current virtual realities already deliver them. Today's challenge, and the focus of my work, is how to integrate classical, well-known haptic feedback into these mixed, substituted and virtual realities, and how to interpret that feedback from this new point of view. The main questions I want to ask right now are:

- Do you feel what you see, or is it vice versa?

- Can users be misled into perceiving a different material in VR than the one they actually feel in their hand?

- Are there materials that map to several textures in VR? For example, can aluminium be used to simulate a glass texture in VR?

Setup

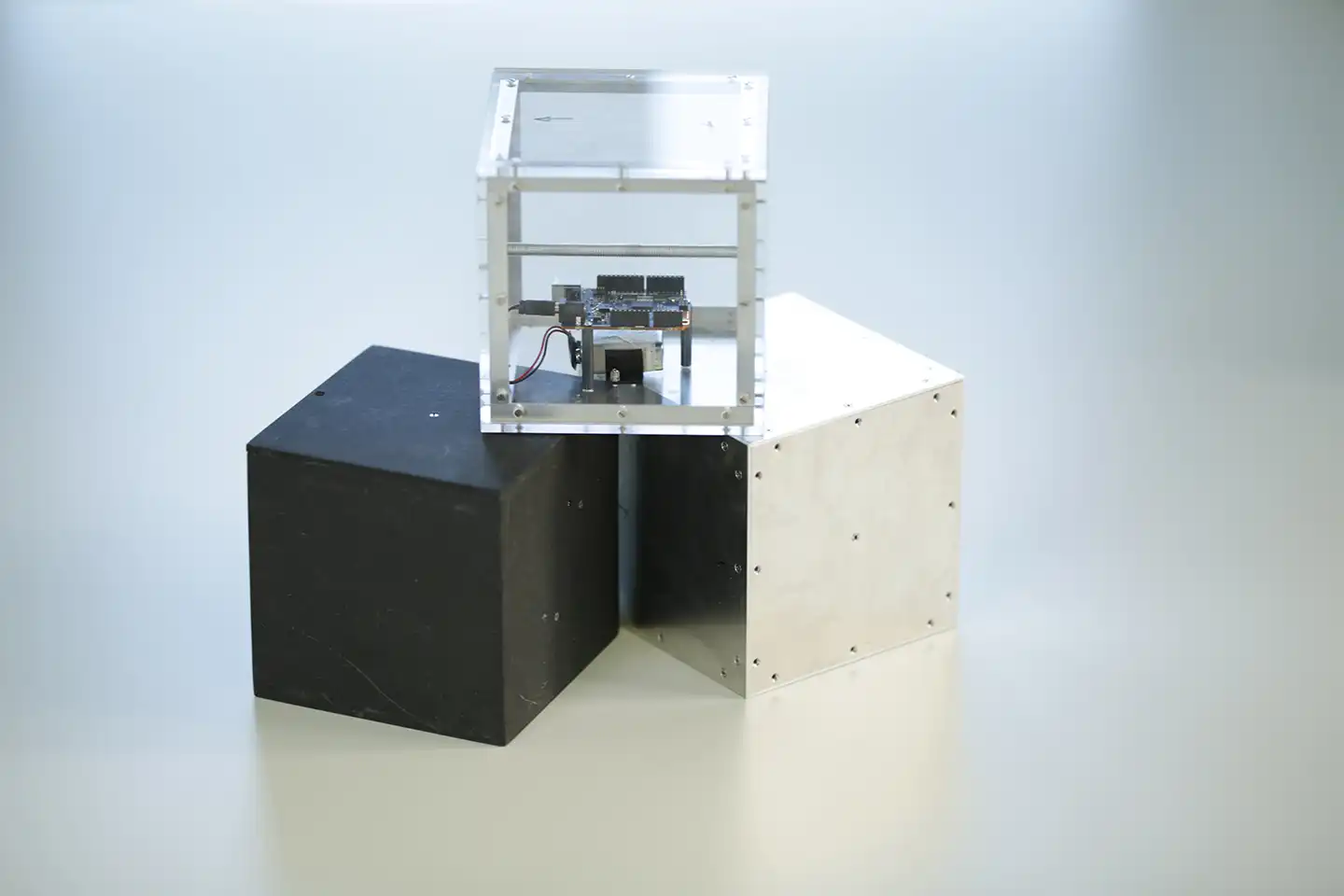

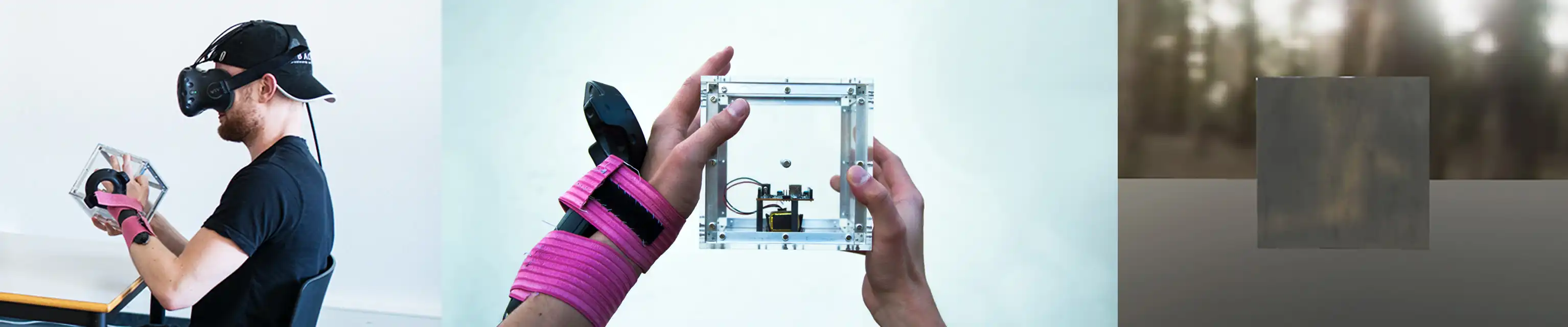

The basic idea of my experiment is to build my own peripheral devices that can be mapped into a virtual space, in order to determine whether haptic sensations can be substituted within the virtual reality. To cover a large spectrum of haptic sensations, I built three different cubes, each measuring 150 mm x 150 mm x 150 mm. Cube 1 is made of standard wooden MDF plates, cube 2 is built out of aluminium, and cube 3 is made from PMMA (also known as acrylic glass). To ensure that all cubes have the same weight, the faces of the cubes vary in thickness so that each cube weighs about 700 g. Inside each cube there is an Arduino board with a BLE chip, a gyroscope and an accelerometer, which measure the cube's orientation and send the data wirelessly to a computer. The board is powered by a standard 9 V battery, so the cube works fully self-sufficiently. To track the orientation of the user's arms relative to the cube, HTC Vive controllers are attached to the user's arms.
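To illustrate how varying the face thickness can equalise the weight of the three cubes, here is a minimal sketch of the arithmetic. It is not the calculation used for the actual build. It assumes typical textbook densities for the three materials, a uniform wall thickness on all six faces, and it ignores the mass of the electronics and battery. The function names `wall_thickness_cm` and `shell_mass_g` are hypothetical.

```python
# Assumed typical densities in g/cm^3 (illustrative values, not from the build).
DENSITY_G_PER_CM3 = {
    "MDF": 0.75,
    "aluminium": 2.70,
    "PMMA": 1.18,
}

EDGE_CM = 15.0         # outer edge length of each cube (150 mm)
TARGET_MASS_G = 700.0  # target mass per cube


def shell_mass_g(density, thickness, edge=EDGE_CM):
    """Mass of a hollow cube: outer volume minus inner cavity, times density."""
    inner_edge = edge - 2.0 * thickness
    return density * (edge ** 3 - inner_edge ** 3)


def wall_thickness_cm(density, edge=EDGE_CM, mass=TARGET_MASS_G):
    """Uniform wall thickness that gives the hollow cube the target mass."""
    shell_volume = mass / density           # material volume needed
    inner_volume = edge ** 3 - shell_volume  # remaining cavity volume
    if inner_volume < 0:
        raise ValueError("a solid cube of this material is lighter than the target mass")
    inner_edge = inner_volume ** (1.0 / 3.0)
    return (edge - inner_edge) / 2.0


if __name__ == "__main__":
    for material, density in DENSITY_G_PER_CM3.items():
        thickness_mm = wall_thickness_cm(density) * 10.0
        print(f"{material}: wall thickness of about {thickness_mm:.1f} mm")
```

Under these assumptions the walls come out at roughly 7.7 mm for MDF, 4.7 mm for PMMA and 2.0 mm for aluminium. The denser the material, the thinner the faces have to be to reach the same 700 g.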

Award

ACM Symposium on Virtual Reality Software and Technology