Perceptive and Intuitive User Interfaces

Perceptive and Intuitive User Interfaces is a forward-thinking lecture aimed at expanding students’ understanding of human-computer interaction beyond traditional mouse and keyboard methods. The course explores more natural and immersive interaction techniques such as gesture recognition, speech interfaces, and brain-computer interfaces. By embracing these advanced modalities, students will gain the knowledge and skills necessary to develop innovative and user-friendly interfaces that are at the forefront of technology, preparing them for the challenges and opportunities in the evolving landscape of user experience design.

Topics Covered:

Communication and Cognition

- Hick’s Law: The time it takes to make a decision increases with the number and complexity of choices; for equally likely options the increase is roughly logarithmic in the number of choices.

- Preattentive Perception: The ability to quickly and effortlessly detect certain visual properties in a scene before focusing attention.

- Law of Proximity: Elements that are close to each other tend to be perceived as a group.

- Miller’s Law (The Magical Number Seven, Plus or Minus Two): The average number of objects an individual can hold in working memory is about 7 ± 2.

- Multimodality: The use of multiple sensory modalities (e.g., visual, auditory, tactile) to communicate or interact with a system.

- Isometric and Isotonic Input Systems:

- Isometric: Input devices that measure force or pressure without movement (e.g., force-sensitive joystick).

- Isotonic: Input devices that measure displacement or movement (e.g., standard joystick or mouse).

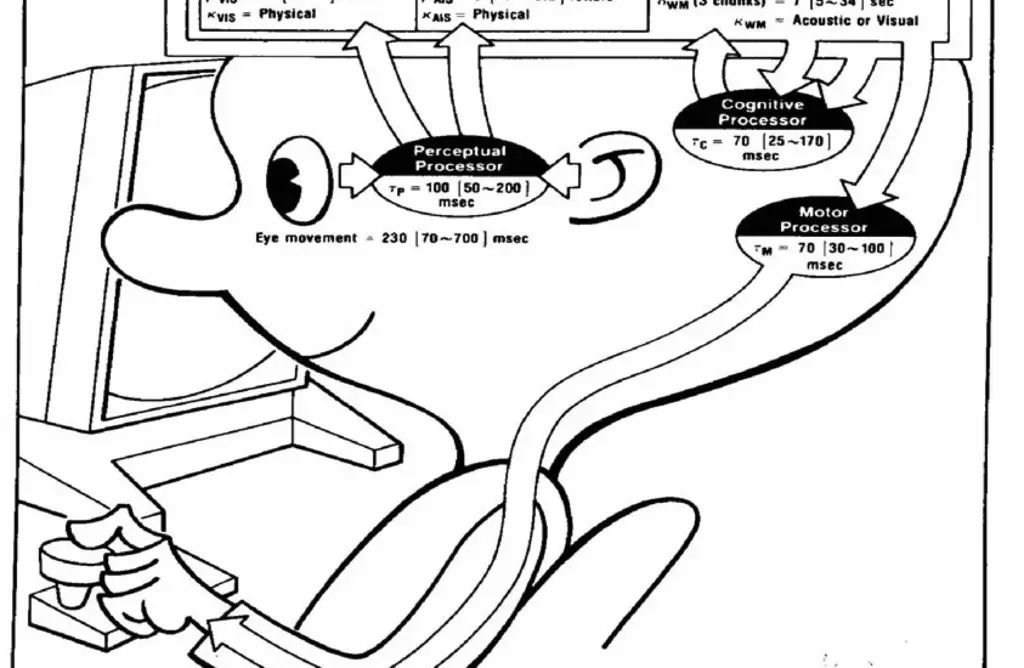

- Human Cognitive Cycle: The repetitive sequence of perception, decision-making, and action that drives human interaction with the environment.

- Characteristics of Intelligent Applications: Features that define smart applications, including adaptability, learning capabilities, context-awareness, and the ability to provide personalized user experiences.
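Hick’s Law from the list above can be turned into a small calculation. The sketch below assumes the common formulation T = a + b · log2(n + 1) for n equally likely choices; the constants `a` and `b` are illustrative placeholders, not measured values, and the function name is hypothetical.

```python
import math


def hick_decision_time(n_choices: int, a: float = 0.2, b: float = 0.15) -> float:
    """Predicted decision time in seconds for n equally likely choices.

    a and b are illustrative constants (assumptions); in practice they are
    fitted to measurements for a specific task and user group.
    """
    if n_choices < 1:
        raise ValueError("n_choices must be at least 1")
    # Decision time grows with the logarithm of the number of choices.
    return a + b * math.log2(n_choices + 1)


if __name__ == "__main__":
    for n in (1, 3, 7, 15):
        print(n, round(hick_decision_time(n), 3))
```

With these placeholder constants, each step from 1 to 3 to 7 to 15 choices adds the same amount of time, which is the practical message of the law: doubling the options does not double the decision time.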

Interaction Design

- Interaction Design and Its Disciplines: The study of designing interactive digital products, environments, systems, and services, encompassing multiple disciplines like UX design, UI design, and more.

- Human-Computer Interaction (HCI): The study of how people interact with computers, and the practice of designing technologies that let humans interact with computers in novel ways.

- OSIT Model: A framework for understanding and designing communication processes in interaction design (e.g., Observation, System, Interaction, Task).

- Interaction Paradigms: Different models or approaches for designing interactions, such as direct manipulation, immersive environments, or multimodal interaction.

- Fitts’s Law: A predictive model of human movement, used primarily in HCI, that estimates the time required to move to and select a target from the distance to the target and the target’s size.

- Steering Law: A law that predicts the time required to navigate through a path of a certain width, applied in interface design for tasks like dragging or moving objects through confined spaces.

- Mapping: The relationship between controls and their effects in the real world, crucial for intuitive interface design.

- Explicit and Implicit Interaction:

- Explicit Interaction: Direct, intentional actions by the user to interact with a system (e.g., clicking a button).

- Implicit Interaction: Unconscious, automatic interactions based on user behavior or context (e.g., a system adapting to a user’s habits).

- Affordances: The perceived and actual properties of an object that determine how it could possibly be used, essential for intuitive design.

- Calm Computing: A design approach that aims to create technology that blends seamlessly into the user’s life, minimizing distractions and cognitive load.
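The two predictive models in this list, the law for pointing at a target and the Steering Law, can be sketched as short formulas. The example assumes the Shannon formulation MT = a + b · log2(D / W + 1) for pointing and T = a + b · (A / W) for a straight tunnel of length A and width W; all constants and function names are illustrative assumptions, not values from the lecture.

```python
import math


def fitts_movement_time(distance: float, width: float,
                        a: float = 0.1, b: float = 0.15) -> float:
    """Predicted time (s) to point at a target of a given width at a given distance.

    a and b are placeholder constants; real values are fitted per device and user.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    index_of_difficulty = math.log2(distance / width + 1)  # in bits
    return a + b * index_of_difficulty


def steering_time(path_length: float, path_width: float,
                  a: float = 0.1, b: float = 0.05) -> float:
    """Predicted time (s) to steer through a straight tunnel of constant width."""
    if path_length < 0 or path_width <= 0:
        raise ValueError("path_length must be >= 0 and path_width must be > 0")
    return a + b * (path_length / path_width)


if __name__ == "__main__":
    # A larger target at the same distance is predicted to be faster to hit.
    print(fitts_movement_time(distance=300, width=100))
    print(fitts_movement_time(distance=300, width=20))
    # A narrower tunnel of the same length is predicted to take longer.
    print(steering_time(path_length=400, path_width=20))
```

Note the difference in shape: pointing time grows logarithmically with the distance-to-width ratio, while steering time grows linearly with the length-to-width ratio, which is why long, narrow cascading menus are comparatively slow to traverse.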

3D Interaction

- Historical Development: A look at the evolution of 3D interaction technologies, including key milestones such as:

- Theremin: One of the first electronic musical instruments, controlled without physical contact.

- BubbleS: An early interactive system that used 3D space for interaction.

- Oblong Industries: Known for their development of spatial operating environments.

- Nintendo Wii: Revolutionized gaming with motion-sensing technology.

- Microsoft Kinect: Advanced gesture recognition and full-body tracking for gaming and other applications.

- Proxemics (Cultural Aspects): The study of personal space and how distance and spatial relationships influence interaction, including cultural variations in spatial behavior.

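- Audience Funnel: A model describing how people in front of an interactive public display become engaged in stages, from passing by and noticing the display to subtle and then direct interaction; it helps a system determine which user in a group it should focus on, often based on proximity, gestures, or other cues.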

- Focus: The strategies and mechanisms by which a system decides whose input or actions to prioritize in a multi-user environment.

- Depth Cameras: An overview of different depth-sensing technologies and how they function:

- Stereo Vision: Uses two cameras and calculates depth from the disparity between their two images.

- Time of Flight: Measures the time it takes for light to travel to an object and back to determine distance.

- Triangulation: Uses angles and distance from a known baseline to calculate depth.

- Light Field Cameras: Capture light from multiple angles, allowing depth to be inferred from a single shot.

- Classification: The process of categorizing data into predefined classes based on features.

- Bayes’ Theorem: A mathematical formula used for calculating conditional probabilities, foundational in many classification algorithms.

- Bayes Classifier: A probabilistic model that applies Bayes’ Theorem to classify data based on likelihood estimates.

- Bayesian Estimator (Bayes Schätzer): A statistical method that estimates the probability of an event based on prior knowledge and observed data.

- Particle Filter: A method for estimating the state of a system that changes over time by tracking a set of weighted samples (particles) of possible states, often used in object tracking and robotics.

- Dynamic Time Warping: An algorithm for measuring similarity between two temporal sequences, which may vary in speed, commonly used in speech recognition and gesture analysis.

- Difference Images: Techniques that compare frames in a video sequence to detect motion or changes in the scene.

- Blob Extraction: The process of identifying and isolating regions in an image that are distinct from the background, often used in object recognition and tracking.
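Of the algorithms in this list, Dynamic Time Warping is easy to illustrate in a few lines. The following is a minimal sketch of the classic dynamic-programming formulation for one-dimensional sequences, assuming the absolute difference as the local cost; the function name and the sample "gesture" values are hypothetical, and real gesture data would typically be multi-dimensional.

```python
from typing import Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Dynamic Time Warping distance between two 1-D sequences.

    cost[i][j] holds the cheapest cumulative cost of aligning the first i
    samples of a with the first j samples of b.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three allowed predecessor alignments.
            cost[i][j] = local + min(cost[i - 1][j],      # a advances, b repeats
                                     cost[i][j - 1],      # b advances, a repeats
                                     cost[i - 1][j - 1])  # both advance
    return cost[n][m]


if __name__ == "__main__":
    template = [1, 2, 3]
    slow_performance = [1, 2, 2, 3]   # same shape, performed more slowly
    print(dtw_distance(template, slow_performance))
    print(dtw_distance(template, [0, 0, 0]))
```

Because samples may be repeated during alignment, a slower performance of the same movement yields a distance of zero here, whereas a sample-by-sample comparison would not even be defined for sequences of different length.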

Conversational Interfaces

- Components of a Conversational User Interface (CUI):

- Speech Recognition: The process of converting spoken language into text.

- Dialog Systems: Systems designed to manage a conversation between a user and a computer, often involving understanding user intents and managing the flow of interaction.

- Speech Synthesis: The artificial production of human speech, converting text into spoken words.

- Turing Test and Chinese Room:

- Turing Test: A test proposed by Alan Turing to determine whether a machine can exhibit intelligent behavior indistinguishable from that of a human.

- Chinese Room: A thought experiment by John Searle that argues against the notion that a computer running a program can have a “mind” or “understand” language, even if it appears to.

- Formants: The resonant frequencies of the vocal tract that shape the sound of speech, critical for distinguishing between different vowels and speech sounds.

- Pronunciation Dictionaries (Aussprachewörterbücher): Resources that provide the correct pronunciation of words, often used in speech recognition and synthesis systems.

- N-grams: Contiguous sequences of n items (such as words or characters) from a given sample of text or speech, commonly used in language modeling and predictive text systems.

- Intents: The purpose or goal behind a user’s input in a conversational interface, which the system must recognize to respond appropriately.

- Word Error Rate (WER): A common metric used to evaluate the performance of speech recognition systems, calculated as the number of word errors (substitutions, deletions, and insertions) divided by the total number of words in the reference transcript.
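The Word Error Rate can be computed with a word-level edit distance. The sketch below assumes whitespace tokenization and no text normalization (case, punctuation), which real evaluations usually add; the function name and the example sentences are hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j]: edit distance between the reference words processed so far
    # (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # aligning i reference words with nothing costs i deletions
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # The recognizer dropped one of four reference words.
    print(word_error_rate("turn on the light", "turn on light"))
```

Because insertions count as errors but the denominator is the reference length, the WER can exceed 1.0 when the recognizer outputs many extra words.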

Evaluation of Interactive Systems

- Why Do We Evaluate?: Evaluation helps in assessing the effectiveness, usability, and overall impact of a system or interface. It ensures that the design meets user needs, identifies areas for improvement, and validates research hypotheses.

- Lab Study vs. Field Study:

- Lab Study: Controlled environment experiments where variables can be carefully managed.

- Field Study: Real-world testing where the system is evaluated in the environment in which it will be used, providing more naturalistic data.

- How to Formulate Research Questions (Wie formuliert man eine Forschungsfrage): Research questions should be clear, focused, and answerable. They guide the evaluation process by defining what is to be investigated and the objectives of the study.

- Evaluation Techniques: Various methods to assess systems, including usability testing, surveys, interviews, and A/B testing, each providing different insights into user experience and system performance.

- Physiological Evaluation Techniques: Methods that measure physical responses to evaluate interactions, such as:

- Heart Rate: Monitored to assess stress or cognitive load.

- Eye Tracking: Used to study where and how users look at a screen, indicating areas of interest or difficulty.

- Sample Size (Stichprobe): The number of participants in a study, which should be sufficient to provide reliable data and represent the target population.

- Between-Group vs. Within-Group Design:

- Between-Group Design: Different groups of participants are exposed to different conditions.

- Within-Group Design: The same group of participants is exposed to all conditions, allowing for comparison within the same subjects.

- Effect Size (Effektstärke): A measure of the strength of the relationship between variables, indicating the magnitude of an observed effect or difference.

- Systematic Errors (Systematische Fehler): Biases or consistent inaccuracies in measurement that affect the validity of results.

- Random Errors (Zufällige Fehler): Unpredictable variations in measurement that occur by chance and can affect the precision of results.
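The effect size listed above can be made concrete with a worked example. The sketch below assumes Cohen's d with a pooled standard deviation, one common effect size measure for comparing two groups in a between-group design; the outline does not say which measure the lecture uses, and the task-time values are made up for illustration.

```python
import math
import statistics
from typing import Sequence


def cohens_d(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Cohen's d: difference of group means in units of the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two measurements")
    var_a = statistics.variance(group_a)  # sample variance (n - 1 in the denominator)
    var_b = statistics.variance(group_b)
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd


if __name__ == "__main__":
    # Hypothetical task completion times in seconds for two interface variants.
    times_variant_a = [2, 4, 6]
    times_variant_b = [1, 3, 5]
    print(cohens_d(times_variant_a, times_variant_b))
```

Unlike a p-value, this number does not depend directly on the sample size: it states how large the difference is relative to the spread of the data, which makes results comparable across studies.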

Physical Computing

- Arduino Platform: An open-source electronics platform based on easy-to-use hardware and software, ideal for creating interactive projects. It allows users to read inputs (e.g., light on a sensor) and turn them into outputs (e.g., activating a motor).

- Sensors: Devices that detect and measure physical properties (such as temperature, light, or motion) and convert them into signals that can be read by the Arduino.

- Actuators: Components that take electrical signals from the Arduino and convert them into physical action, such as moving a motor, turning on a light, or creating sound.

- Series and Parallel Circuits (Reihenschaltung, Parallelschaltung):

- Series Circuit (Reihenschaltung): A circuit in which components are connected end-to-end, so the current flows through each component sequentially.

- Parallel Circuit (Parallelschaltung): A circuit in which components are connected across common points, providing multiple paths for the current to flow.

- Pulse Width Modulation (PWM): A technique used to control the amount of power delivered to electrical devices by varying the width of the pulses in a pulse train, commonly used to control motor speed and LED brightness.
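The effect of Pulse Width Modulation can be estimated with simple arithmetic: the average voltage is the supply voltage multiplied by the duty cycle. The sketch below is written in Python for illustration only and does not run on the board; it assumes an 8-bit PWM value from 0 to 255 and a 5 V supply, as on many common Arduino boards, and the function name is hypothetical.

```python
def pwm_average_voltage(pwm_value: int,
                        supply_voltage: float = 5.0,
                        max_value: int = 255) -> float:
    """Average output voltage for a PWM value between 0 and max_value.

    supply_voltage and max_value are assumptions (5 V supply, 8-bit PWM);
    check the specifications of the board actually used.
    """
    if not 0 <= pwm_value <= max_value:
        raise ValueError(f"pwm_value must be between 0 and {max_value}")
    duty_cycle = pwm_value / max_value  # fraction of each period the pin is high
    return duty_cycle * supply_voltage


if __name__ == "__main__":
    for value in (0, 51, 128, 255):
        print(value, round(pwm_average_voltage(value), 2))
```

The pin itself only ever switches between fully on and fully off; an LED or motor responds to the average, so a higher duty cycle appears as a brighter light or a faster motor.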