Perceptive and Intuitive User Interfaces

WS 22/23

Interactive Multiuser Installation

This topic came with a twist: to make the outcome more interesting, each installation had to implement some kind of knowledge dissonance between participants in order to foster cooperation.

As usual, every student had to pitch an idea of their own at the beginning of the semester, and groups were formed based on these pitches.

One group had the idea to create user interactions that by themselves limit the information received. They set up a classic two-player platformer game in which the virtual player was controlled by blinking (eye blink tracking via mocap4face) and blowing (an anemometer read by an Arduino). This limited the first participant's sight whenever the character had to jump, and the other person could not use their voice because they were busy blowing into the anemometer. To change direction, the two participants had to touch each other's hands (also detected via the Arduino).

The idea behind the second project was to have two people guide a ship through a canyon: the person on the lookout was inside an immersive VR environment, while the helmsman/navigator was placed in front of the 180° wall.

Regarding the dissonance in information, the person on the lookout saw obstacles that the helmsman could not see, so the two had to communicate with each other in order to steer the ship through the canyon.

SS 22

Interactive Real-World Multiplayer

In this semester the goal was to create an interactive multiplayer experience (not necessarily a game) controlled by anything other than a basic keyboard and mouse. The students used a multitude of different input/sensor devices and game engines, such as

- HTC Vive Tracker

- HTC Vive Pro

- Microsoft Azure Kinect

- Anemometer

- Unreal Engine 4

- Unity

As a display, they chose a floor projection as well as the 180° screen.

Pirate License

In this project, you and your partner attend a driving school for pirates. One person has to steer from within the 180° screen, while the other, in VR, has to guide them. The person at the steering wheel also has to blow into an anemometer (read by an Arduino) to make the ship pick up exactly the right amount of speed.

Red Light Green Light

In this adaptation of the famous “Red Light, Green Light” (“rotes Licht, grünes Licht”) game, up to four participants had to move step by step towards the 180° screen as long as they were not seen by the virtual puppet. As soon as the puppet turned its head, participants had to stop moving immediately. Movement was tracked throughout the lab using HTC Vive Trackers and was quite precise.
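The rule described above can be sketched in a few lines. This is only an illustration, not the students' implementation: the function names, the dictionary of tracker positions, and the 5 cm tolerance are all assumptions, and the actual project presumably ran inside a game engine rather than in Python.

```python
import math

# Assumed tolerance: tracker jitter below 5 cm does not count as movement.
MOVE_THRESHOLD_M = 0.05


def has_moved(prev, curr, threshold=MOVE_THRESHOLD_M):
    """Return True if a tracker travelled farther than the threshold."""
    return math.dist(prev, curr) > threshold


def caught_players(prev_positions, curr_positions, puppet_is_watching,
                   threshold=MOVE_THRESHOLD_M):
    """List the players who moved while the puppet was looking.

    prev_positions / curr_positions are hypothetical dicts mapping a
    player id to an (x, y, z) tracker position in metres.
    """
    if not puppet_is_watching:
        return []
    return sorted(
        player
        for player, position in curr_positions.items()
        if player in prev_positions
        and has_moved(prev_positions[player], position, threshold)
    )
```

Calling `caught_players` once per tracking frame with the previous and current positions would yield the players to send back to the start line; a threshold is needed because even a motionless tracker reports small position noise.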

Balance It!

Here the students had the idea to recreate a game known to many children: the “wooden labyrinth”. Instead of a physical board, they used a floor projection, with body movement and position (retrieved from the Azure Kinect) as input.

WS 21/22

The topic for this semester was to create a prototypical interactive application for (semi-)public space on a large screen.

One group created a Minority Report-like riddle game in which the participants had to watch and rearrange videos in order to identify the thief. They used Unity, the Azure Kinect for gesture and pose recognition, and a Leap Motion Controller for detailed hand interaction.

Another group created a floor projection version of the game “the floor is lava”. They used Blender, Unity and two Azure Kinects in order to track the person on the floor.

WS 20/21

In WS 20/21 the lab was closed due to the corona pandemic, which led us to the topic of how to activate people in their home offices and increase communication.

In this semester we tried to lend as much hardware as possible to the students at home, so that they could still tinker with new hardware and learn how to use it. However, we did not ask them to create teaser videos, due to privacy concerns.

In one project, students created a virtual pet on a Samsung Galaxy Smartwatch that got its information about the person's posture from the Microsoft Kinect. If the person did not stand up for a while, the pet became sad and wanted to go for a walk.
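The pet's behaviour amounts to a small timer driven by posture updates. The following sketch illustrates that idea only; the class name, the `update` method, the mood labels, and the 45-minute limit are assumptions and are not taken from the students' project.

```python
from dataclasses import dataclass

# Assumed limit: the pet gets sad after 45 minutes without standing up.
SAD_AFTER_SECONDS = 45 * 60


@dataclass
class VirtualPet:
    """Hypothetical pet whose mood depends on how long the user has sat."""

    sad_after: float = SAD_AFTER_SECONDS
    last_stood_at: float = 0.0

    def update(self, now: float, is_standing: bool) -> str:
        """Feed one posture reading (timestamp in seconds) and get the mood."""
        if is_standing:
            # Standing up resets the timer.
            self.last_stood_at = now
        if now - self.last_stood_at >= self.sad_after:
            return "sad"
        return "happy"
```

In such a setup, the posture flag would come from the depth camera's body tracking, and the returned mood would be sent to the watch to select the pet's animation.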

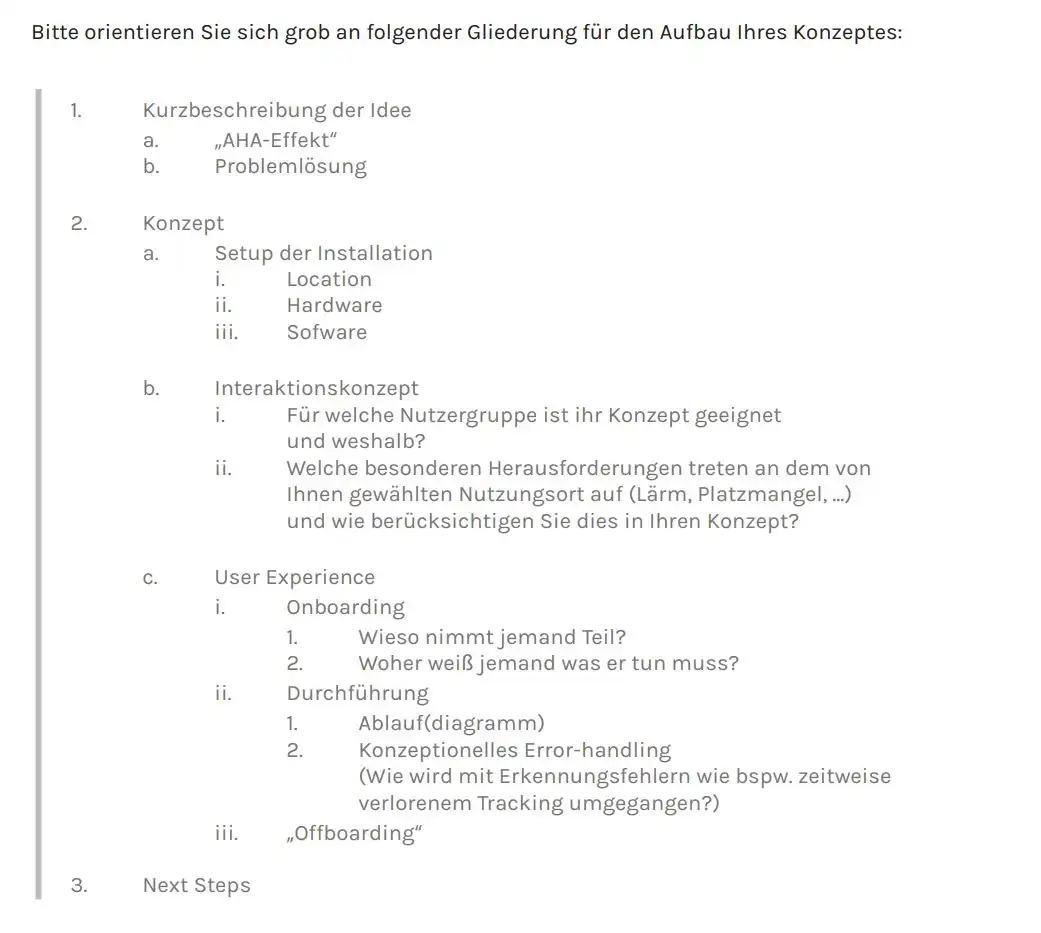

The students had to consider the following points in their projects:

SS 20

Paper Prototyping with Arduino

In this semester the students had to do some hands-on work to create their own Paper Signals application.

Paper Signals is an experiment that explores how physical things can be controlled with voice in an easy and fun way.

Here is the official Google teaser video: